Crazy It Guy

Thursday, June 12, 2025

Monday, March 17, 2025

The frustration of other things and my Garage.

My Garage, one huge mess.

- Even though it's a 5/6-car garage, I can just about fit 2 cars inside.

- I don't really have room to do any projects once the two cars are in.

- I want to start work on a habitat and truck that's 7.5m x 2.5m, so that's completely impossible at the moment.

In short: Too much going on. Need to concentrate on one or two things to get those finished, but as always with ADHD, having problems focusing on one task.. :-( Story of my life, I fear..

Wednesday, January 22, 2025

Ideas are finally taking shape!

A couple of important restrictions/requirements.

1. Garage height: 2.7m (2.55m at the door)

2. Garage length, max ~7.5m

3. Must be able to carry 2 adults and 2 dogs or 4 adults in a pinch.

4. Must not have a 'tilting cab'.

5. Toilet and shower must be of 'normal size'.

6. Ideally, a washing machine/dryer combo should fit.
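The two hard size limits above (door height and garage length) are easy to check against any candidate truck. A minimal sketch, assuming the door height is the limiting height; the `Vehicle` class, the `fit_problems` helper and the example truck's dimensions are all made up for illustration, not real stats:

```python
from dataclasses import dataclass

# Limits taken from the list above.
GARAGE_DOOR_HEIGHT_M = 2.55  # lowest point the truck has to pass under
GARAGE_MAX_LENGTH_M = 7.5


@dataclass
class Vehicle:
    """Hypothetical container for a candidate truck's outer dimensions."""
    name: str
    length_m: float
    height_m: float


def fit_problems(vehicle: Vehicle,
                 door_height_m: float = GARAGE_DOOR_HEIGHT_M,
                 max_length_m: float = GARAGE_MAX_LENGTH_M) -> list[str]:
    """Return a list of reasons the vehicle does not fit (empty if it fits)."""
    problems = []
    if vehicle.height_m > door_height_m:
        problems.append(
            f"too high by {vehicle.height_m - door_height_m:.2f} m")
    if vehicle.length_m > max_length_m:
        problems.append(
            f"too long by {vehicle.length_m - max_length_m:.2f} m")
    return problems


# Made-up example numbers, NOT the stats of any real truck.
candidate = Vehicle(name="example truck", length_m=8.0, height_m=2.5)
for problem in fit_problems(candidate):
    print(f"{candidate.name}: {problem}")
```

An empty list means the truck clears both the door and the back wall; anything else tells me how much has to come off.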

Found it:

Stats:

Modifications:

1. Remove pump from front bumper and replace it with a winch; should save ~30cm in length? Maybe even more.

2. Shorten cab by ~1m to create more space for the box.

3. Convert to 'singles' tires (https://rundhauber.eu/wielen-en-banden/ , https://rundhauber.eu/banden-4-de-finale/). This could be difficult, as the Rundhauber has rims with 8 holes rather than the 10 holes more usual at Mercedes. I would like 'Sprengringfelgen' (lock-ring rims) to make it easy to remove the tires from the wheels. The Hutchinson runflat would also be very cool: https://vrakking-tires.com/20-inch/913-1000vx20-jamak-wheel-8-holes-et-90.html and https://vrakking-tires.com/run-flat-protection/528-run-flat-system-3-pieces-hutchinson.html with beadlock protection https://vrakking-tires.com/run-flat-protection/621-711-beadlock-tire-protection.html#/21-quality-new (enabling you to run lower pressures, down to 0 bar?)

Tuesday, October 8, 2024

Wednesday, July 3, 2024

Fixed it, up & running!

The sweet 'smell' of success.

Finally just caved and got a 'new' motherboard (new to me). Put my two E5-2630L v4 (ES) processors in. Works like a charm.

Wednesday, September 6, 2023

ChatGPT actually gave some useful suggestions!

Thought I'd share them here.

Physical impacts can cause a range of issues, from visible damage to subtle problems that only manifest under specific conditions. Here's a systematic approach to surveying the motherboard for damage:

1. **Visual Inspection**:

- **Magnifying Glass or Loupe**: Use a magnifying glass or a loupe to inspect the motherboard closely. Look for any visible cracks, broken traces, or damaged components.

- **Check Connectors**: Ensure that all connectors, including the PCIe slots, are intact and not bent or broken.

- **Inspect Solder Joints**: Look for any solder joints that might have cracked or become loose due to the impact.

- **Check for Debris**: Ensure there's no debris or foreign objects lodged between components or traces.

2. **Physical Inspection**:

- **Press Gently**: Gently press down on various parts of the motherboard to see if there's any unusual movement, which could indicate a broken stand-off or mounting point.

- **Check the Back**: Turn the motherboard over and inspect the back for any signs of damage or unusual marks.

3. **Functional Testing**:

- **Power On**: If the visual and physical inspections don't reveal any issues, consider powering on the server. Listen for any unusual sounds and observe any irregular LED patterns.

- **BIOS/UEFI Check**: Access the BIOS/UEFI and check for any hardware errors or warnings. Some systems run a self-test during startup and might report issues.

- **PCIe Slot Test**: Since the impact dislodged a card from the PCIe slot, test that specific slot with a known working card to ensure it's still functional. Also, test the previously dislodged card in another slot or system to ensure the card itself isn't damaged.

- **Stress Testing**: Use software tools to stress-test the system. Tools like Prime95, Memtest86, and FurMark can help identify issues with the CPU, memory, and GPU, respectively.

4. **Check Peripheral Components**:

- **RAM**: Ensure that all RAM modules are seated correctly. Consider running a memory test to ensure they're functioning correctly.

- **Storage**: Check that all storage devices (HDDs, SSDs) are securely connected and are recognized by the system.

- **Cooling**: Ensure that all fans are operational and that heatsinks are securely attached.

5. **External Inspection**:

- **Case and Chassis**: Check the server case and chassis for any signs of deformation or damage. Ensure that the motherboard is securely and correctly mounted within the case.

6. **Seek Expert Assistance**:

- If you're unsure about any findings or if the server behaves erratically, consider seeking assistance from a professional or a specialized repair service. They might have specialized equipment, like multimeters or oscilloscopes, to diagnose issues more accurately.

7. **Document Everything**:

- Take photos of any visible damage or irregularities. This can be useful for insurance claims, future reference, or if you decide to seek professional assistance.

Remember, even if the server appears to function normally after the impact, there could be latent issues that might manifest later. It's essential to be thorough in your inspection and testing to ensure the server's reliability and longevity.
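For the functional-testing step, one thing I can script myself is scanning a kernel log for hardware complaints once the server boots. A rough sketch, assuming the box runs Linux and the log text comes from something like `dmesg`; the pattern list is my own guess at useful keywords (not exhaustive) and the sample log lines are made up:

```python
import re

# Keywords that commonly show up in Linux kernel logs when hardware misbehaves.
# This list is an assumption, not an exhaustive or official set.
ERROR_PATTERNS = [
    r"hardware error",
    r"machine check",
    r"pcie bus error",
    r"edac",
]

_ERROR_RE = re.compile("|".join(ERROR_PATTERNS), re.IGNORECASE)


def find_hardware_errors(log_text: str) -> list[str]:
    """Return every log line that matches one of the error keywords."""
    return [line for line in log_text.splitlines() if _ERROR_RE.search(line)]


# Made-up sample lines, only to show the function in use.
sample_log = """\
[    1.234567] pci 0000:03:00.0: enabling device
[   12.345678] pcieport 0000:00:02.0: PCIe Bus Error: severity=Corrected
[   13.456789] usb 1-1: new high-speed USB device
[   20.000001] mce: [Hardware Error]: Machine check events logged
"""

for line in find_hardware_errors(sample_log):
    print(line)
```

A clean result doesn't prove the board is fine, it only means the kernel hasn't complained yet, so the stress tests above still matter.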

Tuesday, September 5, 2023

ML350 G9, the continuing saga.

Part I: received the server, box was pretty 'bashed up'.

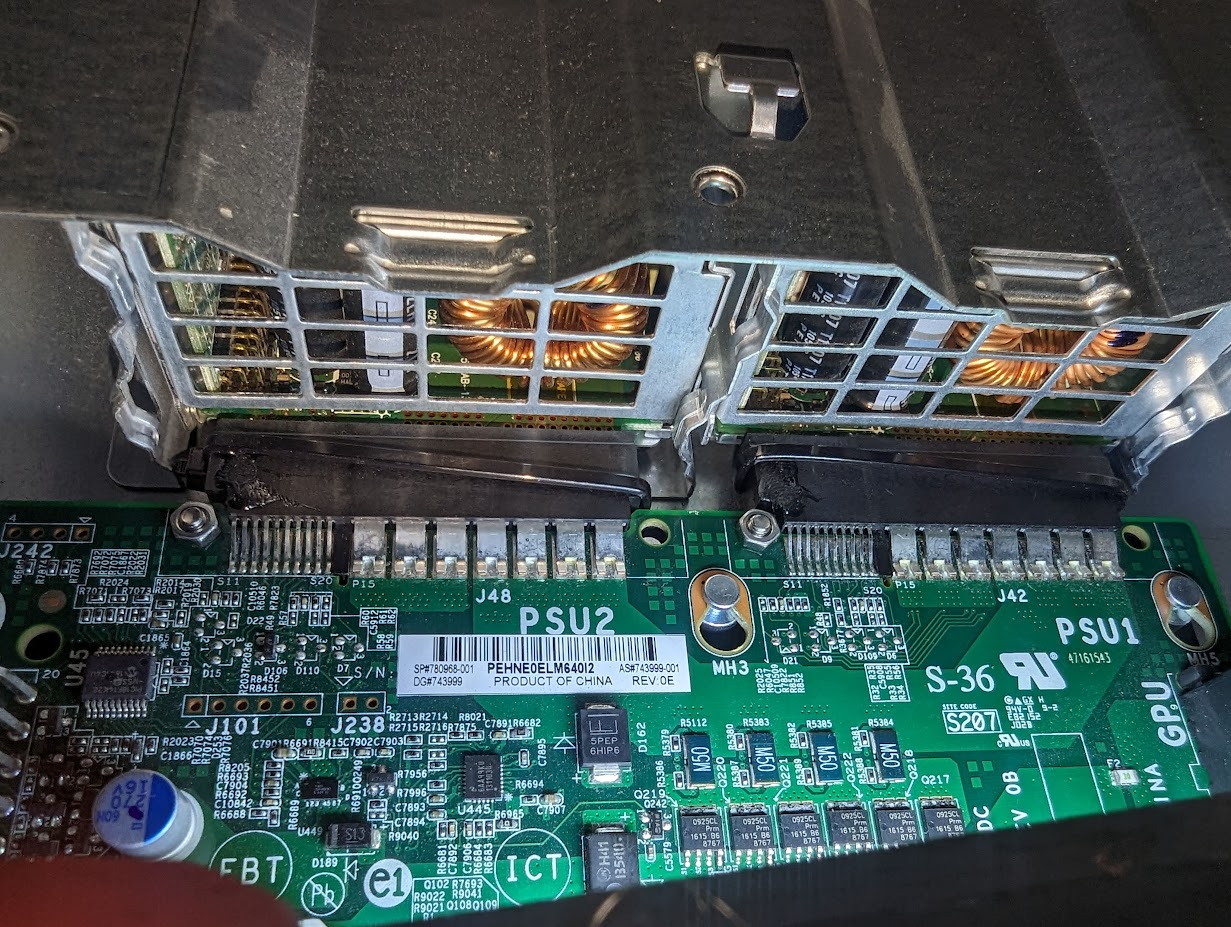

The case was pretty 'bashed up': it had taken a hard impact to the power supplies (the delivery guys probably rested the case on them on the ground).

I've since replaced the power board too, but no luck so far: the same error keeps popping up. It's about an EFUSE (20h). I suspect it might be protecting the PCIe slots (maybe some of the pins have shorted?), but I have no idea where it is or where to look.

So: Motherboard first, then some 'flex' power supplies. Let's see where this goes.

And my own post on Reddit describing my 'pains' with the server board: https://www.reddit.com/r/homelab/comments/168o7ib/help_me_resurrect_my_ml350_g9/